Event Driven Game Server

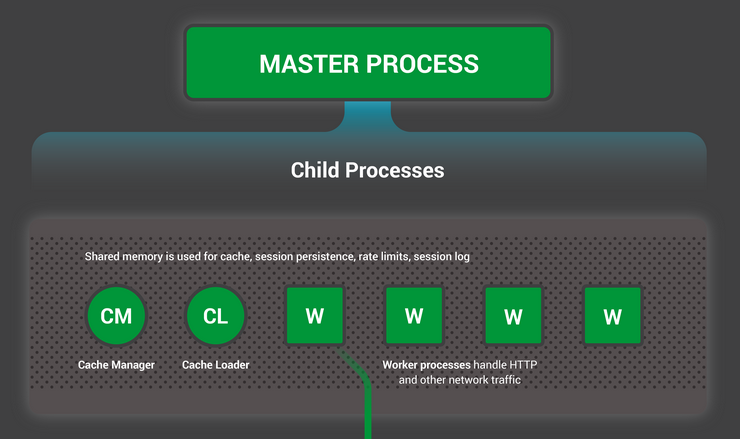

Select() Up: Background Previous: Background. Event-driven servers. An event-driven server typically has a single thread which manages all connections to the server.

October 06, 2017 at 05:48 Tags, This is part 3 of a series of posts on writing concurrent network servers. Introduced the series with some building blocks, and discussed multiple threads as one viable approach for concurrency in the server. Another common approach to achieve concurrency is called event-driven programming, or alternatively asynchronous programming. The range of variations on this approach is very large, so we're going to start by covering the basics - using some of the fundamental APIs than form the base of most higher-level approaches.

Future posts in the series will cover higher-level abstractions, as well as various hybrid approaches. All posts in the series:. Nonblocking I/O As an introduction to the topic, let's talk about the difference between blocking and nonblocking I/O. Blocking I/O is easier to undestand, since this is the 'normal' way we're used to I/O APIs working. While receiving data from a socket, a call to recv blocks until some data is received from the peer connected to the other side of the socket. This is precisely the issue with the sequential server of part 1.

So blocking I/O has an inherent performance problem. We saw one way to tackle this problem in part 2, using multiple threads. As long as one thread is blocked on I/O, other threads can continue using the CPU. In fact, blocking I/O is usually very efficient on resource usage while the thread is waiting - the thread is put to sleep by the OS and only wakes up when whatever it was waiting for is available.

Nonblocking I/O is a different approach. When a socket is set to nonblocking mode, a call to recv (and to send, but let's just focus on receiving here) will always return very quickly, even if there's no data to receive. In this case, it will return a special error status notifying the caller that there's no data to receive at this time.

The caller can then go do something else, or try to call recv again. The difference between blocking and nonblocking recv is easiest to demonstrate with a simple code sample.

Here's a small program that listens on a socket, continuously blocking on recv; when recv returns data, the program just reports how many bytes were received. $./nonblocking-listener 9988 Listening on port 9988 peer (localhost, 37288) connected Calling recv. Calling recv. Calling recv. Calling recv. Calling recv.

Calling recv. Calling recv. Calling recv.

Calling recv. Recv returned 6 bytes Calling recv. Calling recv. Calling recv. Calling recv. Calling recv.

Calling recv. Calling recv. Calling recv. Calling recv. Calling recv. Calling recv.

Recv returned 13 bytes Calling recv. Calling recv. Calling recv. Peer disconnected; I'm done. As an exercise, add a timestamp to the printouts and convince yourself that the total time elapsed between fruitful calls to recv is more or less the delay in typing the lines into nc (rounded to the next 200 ms). So there we have it - using nonblocking recv makes it possible for the listener the check in with the socket, and regain control if no data is available yet.

Another word to describe this in the domain of programming is polling - the main program periodically polls the socket for its readiness. It may seem like a potential solution to the sequential serving issue. Nonblocking recv makes it possible to work with multiple sockets simulatenously, polling them for data and only handling those that have new data.

This is true - concurrent servers could be written this way; but in reality they don't, because the polling approach scales very poorly. First, the 200 ms delay I introduced in the code above is nice for the demonstration (the listener prints only a few lines of 'Calling recv.' Between my typing into nc as opposed to thousands), but it also incurs a delay of up to 200 ms to the server's response time, which is almost certainly undesirable. In real programs the delay would have to be much shorter, and the shorter the sleep, the more CPU the process consumes. These are cycles consumed for just waiting, which isn't great, especially on mobile devices where power matters. But the bigger problem happens when we actually have to work with multiple sockets this way. Imagine this listener is handling 1000 clients concurrently.

This means that in every loop iteration, it has to do a nonblocking recv on each and every one of those 1000 sockets, looking for one which has data ready. This is terribly inefficient, and severely limits the number of clients this server can handle concurrently. There's a catch-22 here: the longer we wait between polls, the less responsive the server is; the shorter we wait, the more CPU resources we burn on useless polling. Frankly, all this polling also feels like useless work. Surely somewhere in the OS it is known which socket is actually ready with data, so we don't have to scan all of them. Indeed, it is, and the rest of this post will showcase a couple of APIs that let us handle multiple clients much more gracefully. Select The select system call is a portable (POSIX), venerable part of the standard Unix API.

It was designed precisely for the problem described towards the end of the previous section - to allow a single thread to 'watch' a non-trivial number of file descriptors for changes, without needlessly spinning in a polling loop. I don't plan to include a comprehensive tutorial for select in this post - there are many websites and book chapters for that - but I will describe its API in the context of the problem we're trying to solve, and will present a fairly complete example. Trakaxpc keygen torrent. Select enables I/O multiplexing - monitoring multiple file descriptors to see if I/O is possible on any of them. Int select ( int nfds, fdset.

readfds, fdset. writefds, fdset.

exceptfds, struct timeval. timeout ); readfds points to a buffer of file descriptors we're watching for read events; fdset is an opaque data structure users manipulate using FD. macros. Writefds is the same for write events. Nfds is the highest file descriptor number (file descriptors are just integers) in the watched buffers. Timeout lets the user specify how long select should block waiting for one of the file descriptors to be ready ( timeout NULL means block indefinitely). I'll ignore exceptfds for now.

The contract of calling select is as follows:. Prior to the call, the user has to create fdset instances for all the different kinds of descriptors to watch.

If we want to watch for both read events and write events, both readfds and writefds should be created and populated. The user uses FDSET to set specific descriptors to watch in the set. For example, if we want to watch descriptors 2, 7 and 10 for read events, we call FDSET three times on readfds, once for each of 2, 7 and 10. select is called. When select returns (let's ignore timeouts for now), it says how many descriptors in the sets passed to it are ready. It also modifies the readfds and writefds sets to mark only those descriptors that are ready.

All the other descriptors are cleared. At this point the user has to iterate over readfds and writefds to find which descriptors are ready (using FDISSET). As a complete example, I've reimplemented our protocol in a concurrent server that uses select. The; what follows is some highlights from the code, with explanations. Warning: this code sample is fairly substantial - so feel free to skip it on first reading if you're short on time. A concurrent server using select Using an I/O multiplexing API like select imposes certain constraints on the design of our server; these may not be immediately obvious, but are worth discussing since they are key to understanding what event-driven programming is all about. Most importantly, always keep in mind that such an approach is, in its core, single-threaded.

The server really is just doing one thing at a time. Since we want to handle multiple clients concurrently, we'll have to structure the code in an unusual way. First, let's talk about the main loop. How would that look? To answer this question let's imagine our server during a flurry of activity - what should it watch for? Two kinds of socket activities:. New clients trying to connect.

These clients should be accept-ed. Existing client sending data. This data has to go through the usual protocol described in, with perhaps some data being sent back. Even though these two activities are somewhat different in nature, we'll have to mix them into the same loop, because there can only be one main loop. Our loop will revolve around calls to select. This select call will watch for the two kinds of events described above.

Here's the part of the code that sets up the file descriptor sets and kicks off the main loop with a call to select. $ python3.6 simple-client.py -n 3 localhost 9090 INFO:2017-09-26 05:29:15,864:conn1 connected. INFO:2017-09-26 05:29:15,864:conn2 connected. INFO:2017-09-26 05:29:15,864:conn0 connected. Synchronous, asynchronous, event-driven, callback-based The select-server code sample provides a good background for discussing just what is meant by 'asynchronous' programming, and how it relates to event-driven and callback-based programming, because all these terms are common in the (rather inconsistent) discussion of concurrent servers. Let's start with a quote from select's man page: select, pselect, FDCLR, FDISSET, FDSET, FDZERO - synchronous I/O multiplexing So select is for synchronous multiplexing. But I've just presented a substantial code sample using select as an example of an asynchronous server; what gives?

The answer is: it depends on your point of view. Synchronous is often used as a synonym for blocking, and the calls to select are, indeed, blocking. So are the calls to send and recv in the sequential and threaded servers presented in parts 1 and 2. So it is fair to say that select is a synchronous API. However, the server design emerging from the use of select is actually asynchronous, or callback-based, or event-driven. Note that the onpeer. functions presented in this post are callbacks; they should never block, and they get invoked due to network events.

They can get partial data, and are expected to retain coherent state in-between invocations. If you've done any amont of GUI programming in the past, all of this is very familiar.

There's an 'event loop' that's often entirely hidden in frameworks, and the application's 'business logic' is built out of callbacks that get invoked by the event loop due to various events - user mouse clicks, menu selections, timers firing, data arriving on sockets, etc. The most ubiquitous model of programming these days is, of course, client-side Javascript, which is written as a bunch of callbacks invoked by user activity on a web page.

The limitations of select Using select for our first example of an asynchronous server makes sense to present the concept, and also because select is such an ubiquitous and portable API. But it also has some significant limitations that manifest when the number of watched file descriptors is very large:.

Limited file descriptor set size. Bad performance.

Event Driven Framework

Let's start with the file descriptor size. FDSETSIZE is a compile-time constant that's usually equal to 1024 on modern systems. It's hard-coded deep in the guts of glibc, and isn't easy to modify.

It limits the number of file descriptors a select call can watch to 1024. These days folks want to write servers that handle 10s of thousands of concurrent clients and more, so this problem is real. There are workarounds, but they aren't portable and aren't easy. The bad performance issue is a bit more subtle, but still very serious.

Note that when select returns, the information it provides to the caller is the number of 'ready' descriptors, and the updated descriptor sets. The descriptor sets map from desrciptor to 'ready/not ready' but they don't provide a way to iterate over all the ready descriptors efficiently.

If there's only a single descriptor that is ready in the set, in the worst case the caller has to iterate over the entire set to find it. This works OK when the number of descriptors watched is small, but if it gets to high numbers this overhead starts hurting.

For these reasons select has recently fallen out of favor for writing high-performance concurrent servers. Every popular OS has its own, non-portable APIs that permit users to write much more performant event loops; higher-level interfaces like frameworks and high-level languages usually wrap these APIs in a single portable interface. Epoll As an example, let's look at epoll, Linux's solution to the high-volume I/O event notification problem. The key to epoll's efficiency is greater cooperation from the kernel. Instead of using a file descriptor set, epollwait fills a buffer with events that are currently ready. Only the ready events are added to the buffer, so there is no need to iterate over all the currently watched file descriptors in the client. This changes the process of discovering which descriptors are ready from O(N) in select's case to O(1).

A full presentation of the epoll API is not the goal here - there are plenty of online resources for that. As you may have guessed, though, I am going to write yet another version of our concurrent server - this time using epoll instead of select. The full code sample. In fact, since the vast majority of the code is the same as select-server, I'll only focus on the novelty - the use of epoll in the main loop.

I'm thinking about coming up with a turn based, possibly multiplayer game, except that I'm not sure what the architecture between the game and the players would be like. This is partly because the interface/client will be using Java Swing.

Since Swing is event driven, GUI interaction is asynchronous. This seems hard to mesh with the synchronous nature of a turn based game.

Here's the idea I had. There'd be a main game model or server. Associated with the game are one or more players where some are human while others are AIs. The game lets each player, one at a time, to take a turn.

'Taking a turn' is defined as performing one or more atomic actions that affects the simulated world, such as moving or using items. The game has control over what order the players are given chances to act. That is, each player has a 'speed' value; faster players act first or get more consecutive turns). The turns should pass fairly quickly; if there's only one human player with a bunch of AI players, he should not notice too much time between his own turns (except for animations, alerts, etc.). This makes me imagine the game server as running in a while loop where in each pass it chooses a player and calls its 'take turn' method, assuming I got networking abstracted away. However, as I've said the client will be done in Java Swing.

I have experience with Swing, but I'm not sure how to make them work for the game client. Since the interface is event driven while the game itself is turn based, I have to find a way to make the game server 'pause' when it's a human player's turn. The game loop has to be put to sleep somehow when it's a human's turn, then woken back up when the player has given valid input.

While the game is waiting, the human player should be able to use other GUI items in the client that do not take a turn such as checking his inventory or message log. I have the impression that the game would run in a separate thread (that's a given if I actually put in multiplay). I'm not sure about an elegant way to make the game wait for a human player, except for maybe entering another loop until the player triggers some event in the GUI that take the turn.

This sounds like a common issue with games, so is there a standard way of designing this kind of architecture? First, the server can be either synchronous or asynchronous, depending on whether or not the AI needs to do any 'thinking' while the game waits for the player to make his turn, and also on whether any other player may have need to send/receive data from the server. Generally what happens is the main game loop polls the different player connections to determine if any input has been received. Since it's a turn-based strategy only the currently active player can send 'move' actions. While other players may still send commands such as 'GetInventory', etc.If a player does submit move actions, the Server can just check to see if that was the currently selected player.

If not, it can either silently ignore the instructions or even drop the player from the game, as he's likely cheating. (we'll see why in a moment) So at any rate, your game loop on the server goes through a more or less infinite game update cycles where it receives input from network connection, processes it, and then sends results. It can also do any AI thinking, or other utility operations it needs each cycle. If it polls for input and none was received and there are not AI 'thoughts' which need to be processed, the loop starts over. As for the client, they can take as long as they like to click on their move/attack buttons, as the server is quietly looping, receiving input from all players and performing its own logic. When the player makes a move, that is sent to the game server which processes the input, and updates everyone's game state. It also sends a notification to the player who was the active player and informs him he's no longer the active player.

The client then disables all controls which are not allowed to be pressed while not active. (This is why the server shouldn't be receiving move/attack messages from the non-active player. The client can even be responsible for disabling its interface elements when a move action is made, without waiting for the server to notify it of a successful action.) Next, the server sends a notification to the new active player. This then, enables all controls, and the client UI sits there patiently waiting for the user to 'do' something, and the server goes back to waiting for input from the clients and processing AI strategy. Hope this helps!